Teams still spend weeks wiring together models, retrievers, and tool calls to build reliable workflows. That slows product launches and adds hidden ops work for users and engineers.

You’ve likely hit brittle integrations, opaque failures, and slow iteration cycles—especially when a visual editor promises speed but forces code-level fixes. That gap matters now that Workday acquired the project; enterprise stakes are higher.

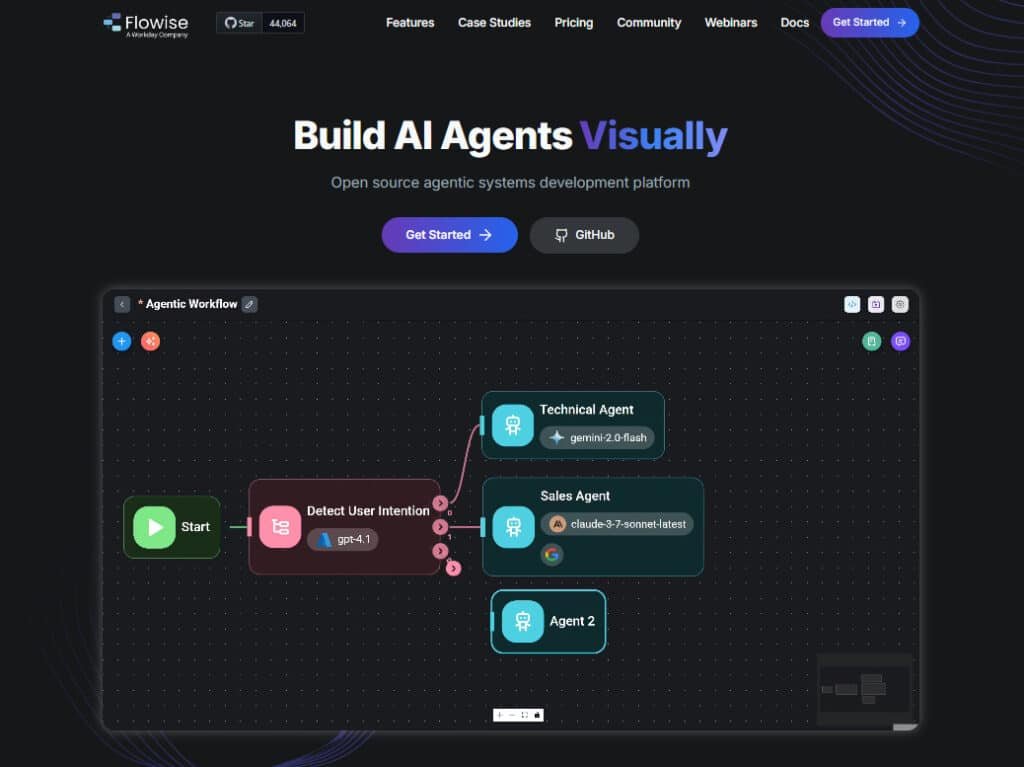

I’ve used Flowise, an open-source, low-code visual builder that turns LangChain components into connectable blocks. It simplifies development of multi-agent and RAG applications and offers Agentflow, Chatflow, human-in-the-loop (HITL) checkpoints, and observability through Prometheus and OpenTelemetry.

I’ll walk you through features, pricing (including the free tier with 2 flows and 100 predictions), pros and cons, alternatives, and a short personal case study. Expect pragmatic notes on where the interface speeds builds and where debugging still needs work.

Bottom line: If you want faster orchestration of real production workflows and sane integrations with LLMOps tools, this platform is worth testing. Let’s dive in.

Key Takeaways: Flowise AI Review

- The visual editor speeds initial development of workflows and applications.

- Agentflow and Chatflow shine for orchestration and RAG use cases.

- Observability and HITL checkpoints improve production reliability.

- Debugging depth lags some peers—expect basic If/Else control today.

- Free tier (2 flows, 100 predictions) lets users test before scaling.

Flowise AI Review: An Overview

Raamish’s Take

Flowise AI shines as an open-source low-code platform for building AI agents and LLM orchestration flows with a drag-and-drop interface. It supports over 100 large language models, embeddings, and vector databases, perfect for creating chatbots or multi-agent systems.

I like its rapid prototyping ability. You can build conversational agents with memory, add RAG for file retrieval (PDFs, CSVs), and include human-in-the-loop reviews. Observability tools like Prometheus help track performance easily.

It offers APIs, SDKs (TypeScript, Python), and embeddable chat widgets for seamless integration. Companies like Publicis Groupe use it to embed AI, reducing deployment efforts.

In my view, Flowise simplifies custom AI development, ideal for teams avoiding complex coding. It focuses on innovation over infrastructure.

Overall, it’s a reliable tool with strong community support.

Introduction to Flowise AI

The low‑code landscape has matured, and this platform sits at the intersection of visual builders and open‑source orchestration.

I’ve seen a surge in low‑code LLM development platforms that let non‑engineers ship assistants and agents quickly. This project leans into that trend by turning LangChain pieces into connectable blocks for faster development of practical applications.

Where it fits in the current landscape

This tool ranks with other open‑source platforms that make visual composition of workflows accessible to both engineers and citizen builders. It’s a natural fit when teams need rapid iteration and self‑hosting for compliance.

Founders, YC roots, and the Workday context

The project began with Henry Heng (CEO) and Chung Yau Ong and joined YC’s S23 batch. On August 14, Workday acquired the project to strengthen enterprise automation—especially in HR and finance—while keeping an eye on community commitments.

Who uses it today

Reported users range from indie makers shipping document‑grounded chatbots to ops teams building internal copilots. Enterprises adopt self‑hosting for governance, and an active GitHub community helps grow templates and components.

- Indie builders: fast MVPs and RAG prototypes

- Ops teams: internal assistants and workflow automation

- Enterprises: regulated deployments with on‑prem needs

What is Flowise AI?

Flowise AI is an open-source, low-code orchestration layer that turns LangChain pieces into draggable nodes. I find that approach removes a lot of setup friction. You still control the details, but you no longer wrestle with every connector in raw code.

Open-source, low‑code orchestration for LangChain components

The platform exposes components—prompts, memory, retrievers, vector DBs, and tool calls—as visual blocks. You wire those blocks into a clear workflow, then run flows that execute multi-step logic for large language tasks.

Agentflow coordinates multiple specialized agents to handle complex pipelines. Chatflow builds single-agent assistants with retrieval-augmented generation for document-grounded responses.

How it benefits developers, citizen builders, and ops teams

For developers, this cuts the time to prototype and ship LLM applications: less boilerplate, more iteration. I used the canvas to assemble a RAG assistant in minutes.

Citizen builders get a safer on-ramp: visual nodes reduce accidental misconfigurations and make customization obvious. Ops teams gain logs, tracing (Prometheus/OpenTelemetry), and human-in-the-loop checkpoints for quality gates.

- Faster development: assemble components instead of coding glue logic.

- Governance: observability and HITL checkpoints for production readiness.

- Secure execution: code runs in an E2B sandbox when heavy processing is needed.

Let’s cut to the essentials: what has changed in the current AI landscape, why it matters, and how this platform maps to current agent trends. I’ll keep it short and practical so you can decide whether to test it in your stack.

What’s new and notable right now

The biggest update is enterprise alignment after the Workday acquisition—expect tighter focus on governance and compliance. Agentflow now supports clearer multi‑agent coordination and Chatflow continues to power RAG‑style assistants.

Key additions include HITL checkpoints, execution traces, and hooks for Prometheus and OpenTelemetry. An embeddable chat UI and code‑baked marketplace templates speed development when you want repeatable components.

How the platform aligns with agent trends

This tool follows where the community standardizes: multi‑agent systems for task specialization and single‑agent RAG for document grounding. Its capabilities favor orchestration and production readiness more than flashy prototyping.

I’ll be candid: the interface and components feel denser than some peers, native debugging remains basic, and branching logic is limited to If/Else. Still, self-hosted performance is solid for most users, while cloud options have lagged.

- Why try it: strong orchestration, governance features, and marketplace momentum.

- Watchouts: steeper learning curve and lighter debugging than Dify or Langflow.

Best Features of Flowise AI

Below I highlight the tools and controls that saved me time when turning prototypes into production systems. This section hits the practical value: how each capability works, why it matters, and when to pick it.

1. Iterate Fast: The Core Building Blocks

Flowise lets you build workflows without deep coding expertise. Its modular drag-and-drop interface lets you snap components together into anything from simple scripts to autonomous agents.

It ingests common sources such as PDF, CSV, and SQL data, which simplifies data integration for LLM-driven applications. Install it with `npm install -g flowise`, then launch it with `npx flowise start` to begin prototyping right away.

Compared with hand-written glue code, this shortens development cycles, which suits startups and small teams that need quick, scalable LLM solutions.

2. Agentflow: Multi-Agent Magic

Agentflow lets you orchestrate complex workflows across multiple coordinated, LLM-backed agents.

Each agent handles a distinct task, such as research or summarization, within a single system. This division of labor improves efficiency for intricate applications.

Integration with over 100 language models gives you flexibility in tailoring workflows. For multi-step processes, Agentflow automates task delegation that you would otherwise code by hand.

The payoff for businesses is faster delivery of AI-driven solutions without extensive coding or stitching together multiple platforms, which makes it a strong fit for scalable, collaborative LLM projects.

3. Chatflow: Single-Agent Chatbots

Chatflow lets you build single-agent chatbots with tool calling and retrieval-augmented generation (RAG).

It pulls data from sources such as documents or databases and grounds the bot’s answers in that data.

The resulting bots can serve web or mobile front ends, which suits customer-facing use. If you want to improve customer support or automate inquiries, Chatflow’s RAG support delivers quick, context-aware responses.

It also cuts manual setup time, so small businesses and solo developers can deploy capable chatbots without deep technical skills.

4. HITL: Human in the Loop

Human in the Loop (HITL) lets people review and refine LLM-driven tasks inside a workflow.

Adding human oversight to automated steps improves accuracy, which matters most in compliance-heavy sectors like healthcare or finance and in precision-sensitive apps such as regulatory reporting.

For teams that prioritize quality control, HITL reduces errors in LLM outputs and builds trust in AI applications. Human feedback balances automation with reliability, so you keep high standards without giving up much speed.

That makes it a critical tool for organizations that need dependable AI-driven processes.
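
To make the checkpoint idea concrete, here is a minimal Python sketch of an approval gate. It is not Flowise’s internal implementation; the `Checkpoint` class and `release` function are hypothetical names used only to show the pattern of holding a draft until a reviewer signs off.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Checkpoint:
    """A draft that waits for a human decision before it can be sent."""
    draft: str
    status: str = "pending"  # pending -> approved | rejected
    final_text: Optional[str] = None
    notes: list = field(default_factory=list)

    def approve(self, edited_text=None):
        # A reviewer may approve the draft as-is or supply a corrected version.
        self.final_text = edited_text if edited_text is not None else self.draft
        self.status = "approved"

    def reject(self, reason):
        # Keep the reason so the flow can retry with more context.
        self.notes.append(reason)
        self.status = "rejected"


def release(checkpoint):
    """Return the text to send, or raise if no human has approved it."""
    if checkpoint.status != "approved":
        raise PermissionError(f"cannot send: checkpoint is {checkpoint.status}")
    return checkpoint.final_text
```

The useful property is that `release` fails loudly for anything not explicitly approved, so an unreviewed draft cannot slip through by default.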

5. Observability: Execution Traces

Flowise’s observability feature provides execution traces for each run and integrates with tools like Prometheus and OpenTelemetry.

This lets you monitor LLM performance and keeps app operations transparent. Because every step is tracked, debugging and optimizing complex workflows gets simpler.

If you maintain production AI systems, these traces are the quickest way to identify bottlenecks or errors. They plug into existing monitoring tools, which helps reduce downtime.

For developers and enterprises, the result is clearer insight into operational health without a complex setup.

6. Developer Friendly: Embed and Extend

Flowise’s developer tools include APIs, TypeScript and Python SDKs, and an embeddable chat widget for integrating flows into apps.

You can extend LLM capabilities with minimal coding and embed branded chatbots into websites or other platforms.

If you are enhancing existing software, these tools let you add AI features without overhauling the system. The APIs and SDKs fit diverse tech stacks, while the embedded widget puts the assistant directly in front of users.

This makes Flowise a good fit for developers who need scalable, customizable LLM solutions without extensive development resources.
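
As a rough illustration of the API route, here is a minimal Python sketch that builds a request to a Chatflow using only the standard library. It assumes a self-hosted instance at `http://localhost:3000` and the REST prediction route `/api/v1/prediction/<chatflow-id>`; the chatflow ID, the `FLOWISE_API_KEY` environment variable, and the helper names are placeholders, so check them against your own deployment.

```python
import json
import os
import urllib.request

# Assumptions: base URL, chatflow ID, and env var name are placeholders.
BASE_URL = "http://localhost:3000"
CHATFLOW_ID = "your-chatflow-id"


def build_prediction_request(question, api_key=None):
    """Build (but do not send) a POST request asking a chatflow a question."""
    url = f"{BASE_URL}/api/v1/prediction/{CHATFLOW_ID}"
    headers = {"Content-Type": "application/json"}
    if api_key:
        # Only needed when the flow is protected by an API key.
        headers["Authorization"] = f"Bearer {api_key}"
    body = json.dumps({"question": question}).encode("utf-8")
    return urllib.request.Request(url, data=body, headers=headers, method="POST")


def ask(question):
    """Send the request and return the decoded JSON response."""
    req = build_prediction_request(question, os.environ.get("FLOWISE_API_KEY"))
    with urllib.request.urlopen(req, timeout=30) as resp:
        return json.load(resp)
```

Keeping request construction separate from sending makes the call easy to unit-test without a running server, and reading the key from the environment keeps credentials out of source control.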

7. Enterprise Ready: Production Scale

Flowise’s enterprise-grade infrastructure supports on-prem or cloud deployment and scales with message queues and workers. It integrates with over 100 LLMs, embedding models, and vector databases.

The paid plans, such as Pro and Enterprise, unlock higher prediction limits and features like SSO and audit logs. For teams managing large-scale AI deployments, this provides robust, secure systems.

Horizontal scaling handles high workloads, while air-gapped options suit regulated industries. Enterprises get 99.99% uptime and personalized support, which makes Flowise a reliable choice for mission-critical LLM applications that require consistent performance and compliance.

8. Community and Learning

Flowise’s open-source community, with 12,000 GitHub stars, offers templates for LLM-powered apps like conversational agents or PDF chatbots.

Webinars with partners like SingleStore and Milvus teach no-code RAG and SQL bot creation. An affiliate program offers 20% commission, encouraging community growth.

For users exploring AI development, these resources lower the learning curve with practical guides for building and scaling applications.

Community support and tutorials help users master LLM tools quickly, which makes Flowise accessible to beginners and pros alike, with real-world examples to inspire new projects.

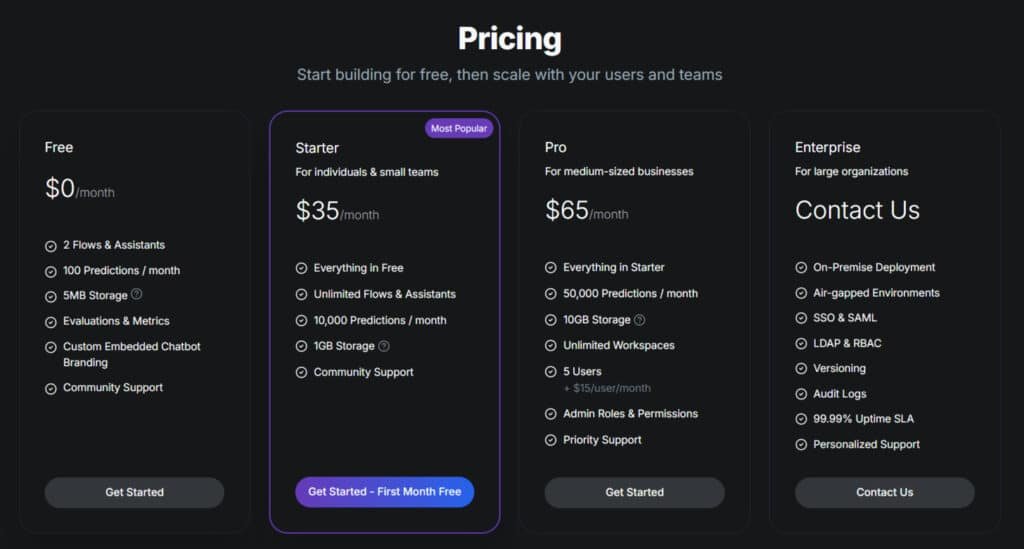

Pricing Plans of Flowise AI

Costs matter—here’s a practical, no‑fluff breakdown of what each plan unlocks and when you should move from free testing to paid plans.

Free tier: getting started with flows and predictions

The free plan is $0/month and is great for hobby projects or quick tests. It includes 2 flows and 100 predictions, so you can validate ideas without risk.

Use this tier to prototype ingestion, basic RAG setups, and initial UI embeds before you invite other users or increase traffic.

Starter and Pro plans: scaling teams, roles, and prediction volume

Starter ($35/month): unlimited flows and assistants plus 10,000 predictions per month, which is sensible for small teams running pilot projects. You’ll usually upgrade when prediction usage or concurrent users outgrow the free quota.

Pro ($65/month): adds team access, admin roles, and higher prediction volume. Pick this when multiple contributors need controlled access and you standardize the platform across projects.

Enterprise options: compliance, governance, and deployment flexibility

Enterprise pricing is custom (contact sales). This tier focuses on governance features—SSO, audit logs, strict role controls, and private or on‑prem deployment options.

If your work sits in HR, finance, or other regulated domains, Enterprise gives the deployment controls and compliance checklists IT expects.

- Quick decision guide: Free for prototypes; Starter for first pilots; Pro when teams scale; Enterprise for audited deployments.

- How access changes: Roles, permissions, and deployment options increase with each paid plan—plan for who needs admin rights and who only needs runtime usage.
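
The decision guide above can be sketched as a small Python helper. The limits and prices are the ones quoted in this review, so verify them against the current pricing page; `suggest_plan` and its parameters are hypothetical names for illustration.

```python
# Limits and prices as quoted in this review; verify before relying on them.
FREE_FLOWS = 2
FREE_PREDICTIONS = 100
STARTER_PREDICTIONS = 10_000


def suggest_plan(flows, monthly_predictions, needs_team_roles=False,
                 needs_sso_or_audit=False):
    """Return the lowest tier from this review that covers the stated needs."""
    if needs_sso_or_audit:
        return "Enterprise (custom pricing)"
    if needs_team_roles:
        return "Pro ($65/month)"
    if flows <= FREE_FLOWS and monthly_predictions <= FREE_PREDICTIONS:
        return "Free ($0/month)"
    if monthly_predictions <= STARTER_PREDICTIONS:
        return "Starter ($35/month)"
    # The review does not state Pro's exact prediction cap.
    return "Pro ($65/month)"
```

For example, `suggest_plan(3, 50)` returns the Starter tier, because a third flow exceeds the free allowance even though prediction volume is low.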

Pros & Cons

Here’s a clear look at what works well and where you’ll hit friction when building production workflows.

Pros

Speed to value: I’ve seen teams move from idea to a working RAG assistant quickly, thanks to the visual builder and Agentflow patterns.

Enterprise controls: HITL checkpoints, tracing, and role‑based workflows give strong governance and operational support.

Feature set: Integrations with LLMOps tools, an embeddable UI, good customization, and stable self‑hosting make this a reliable platform for serious users.

Cons

Learning curve: Complex flows get tricky—expect some ramp time when you chain many nodes.

Debugging and logic limits: Native debugging is lighter than peers (Dify, Langflow) and logic tops out at If/Else, so edge cases can slow iteration.

Ecosystem maturity: The marketplace and community are still maturing, and cloud launches have lagged, which limits third-party support and templates.

Quick summary: who will like this

“If you value orchestration-first capabilities and enterprise-grade controls, this platform rewards operational teams more than quick prototypers.”

In short: product and ops teams who need strong orchestration, observability, and predictable performance will be happiest. If deep experiment tracking, loop logic, or rich debugging are mission‑critical, scan alternatives before you commit to this tool.

Integrations, Deployment, and Security

Real-world projects live or die on clean integrations, sensible deployment, and clear access controls. I’ve wired document sources, databases, and vector stores so responses stay accurate. You can ingest PDFs, DOCs, CSVs, and SQL and link retrievers to common vector databases for robust RAG pipelines.

Data sources, tools, and vector databases

I connect file ingestion, database queries, and retrievers to a vector DB (Pinecone, Weaviate, or an open-source vector store). This keeps search fast and maintainable.
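
To show what the retriever step does conceptually, here is a toy Python sketch that chunks text and ranks chunks by cosine similarity. It uses word counts in place of real embeddings and an in-memory list in place of a vector database, so treat every function name here as illustrative and not as part of Flowise.

```python
import math
import re
from collections import Counter


def chunk(text, max_words=40):
    """Split text into consecutive chunks of at most max_words words."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def embed(text):
    """Toy 'embedding': lowercase word counts (a real model returns dense vectors)."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a, b):
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query, chunks, top_k=2):
    """Return the top_k chunks most similar to the query."""
    q = embed(query)
    ranked = sorted(chunks, key=lambda c: cosine(q, embed(c)), reverse=True)
    return ranked[:top_k]
```

A production pipeline swaps `embed` for an embedding model and the sorted list for a vector store query, but the shape of the flow (chunk, embed, rank, pass the top results to the model) stays the same.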

Tools and integrations: LLMOps hooks (LangSmith, LangFuse, Lunary, LangWatch), embeddable chat UI, and retriever plugins make the stack easier for developers and users.

Self-hosting, observability, and deployment

For control I prefer self-hosted Docker or Kubernetes. That gives predictable deployment patterns and easier ops support.

Observability: Prometheus and OpenTelemetry provide traces and metrics. Those help you spot regressions before customers notice.

Access control and regulated teams

Access and role management matter. I separate staging and production, lock credentials in a secrets manager, and use SSO for customer-facing embeds.

For regulated systems choose private or on‑prem deployment, audit logs, and strict role-based control to satisfy compliance reviews.

| Area | Recommended Options | Why it matters | Who owns it |
|---|---|---|---|
| Data ingestion | PDF/DOC/CSV/SQL + retrievers | Keeps RAG responses grounded and auditable | Developers / Data Engineers |
| Deployment | Docker / Kubernetes (on‑prem) | Predictable scale, compliance-friendly | Platform / Ops |
| Observability & Access | Prometheus, OpenTelemetry, SSO, RBAC | Traceability, audit logs, and least-privilege control | Security / IT |

Performance, Debugging, and Stability

When a flow misbehaves, execution traces are the first place I look to diagnose what went wrong. Tracing gives a timeline of each node, which helps you isolate slow steps and failing calls.

Execution tracing in practice

I use traces to step through a workflow and verify inputs and outputs at each node. The platform provides basic execution traces and observability hooks that connect to Prometheus or OpenTelemetry.
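
Here is a small Python sketch of how I read a trace once it is exported: find the slowest node, list failures, and compute total run time. The `Span` shape is hypothetical and does not mirror Flowise’s or OpenTelemetry’s actual schema; adapt the fields to whatever your exporter emits.

```python
from dataclasses import dataclass


@dataclass
class Span:
    """One node execution in a flow run (hypothetical shape)."""
    node: str
    start_ms: int
    end_ms: int
    ok: bool = True

    @property
    def duration_ms(self):
        return self.end_ms - self.start_ms


def summarize(spans):
    """Return the slowest node, failed nodes, and total wall-clock time."""
    if not spans:
        return {"slowest": None, "failed": [], "total_ms": 0}
    slowest = max(spans, key=lambda s: s.duration_ms)
    total = max(s.end_ms for s in spans) - min(s.start_ms for s in spans)
    return {
        "slowest": slowest.node,
        "failed": [s.node for s in spans if not s.ok],
        "total_ms": total,
    }
```

Running a summary like this over every failed run is a cheap way to see whether slowness comes from retrieval, the model call, or a tool.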

Practical note: these traces are helpful, but lighter than Dify’s deep run histories and nested sub-workflow logs. Dify keeps a detailed record of runs, which speeds root-cause analysis for complex multi-step flows.

Compared to Langflow, you get fewer in-canvas debug affordances. Langflow shows per-node timings and IO peeks that make isolating brittle code faster during iteration.

Stability in self-hosted deployments

In my Azure deployments the platform held steady for long-running workflows after proper provisioning. Once you set resources and logging, stability is solid for production use.

That said, native debugging is limited. When code goes sideways I lean on external logs and LLMOps tools for deeper context. Plan to instrument early, capture parameters, and test sub-paths so you can reproduce failures outside the visual canvas.

- Developer workflow: instrument, run narrow tests, and push telemetry to an external store.

- Migration tip: teams moving from Dify or Langflow should expect different interface trade-offs and add external tracing to fill gaps.
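
One way to follow the "instrument early" advice is a decorator that logs each custom step’s inputs, outcome, and duration as a JSON line. This is a generic Python sketch, not a Flowise feature; the logger name and the `parse_table` stand-in are assumptions for illustration.

```python
import functools
import json
import logging
import time

logger = logging.getLogger("flow_steps")


def instrumented(step_name):
    """Log inputs, outcome, and duration of a step as one JSON line."""
    def decorator(func):
        @functools.wraps(func)
        def wrapper(*args, **kwargs):
            record = {"step": step_name, "args": repr(args), "kwargs": repr(kwargs)}
            started = time.perf_counter()
            try:
                result = func(*args, **kwargs)
                record["status"] = "ok"
                return result
            except Exception as exc:
                record["status"] = "error"
                record["error"] = repr(exc)
                raise
            finally:
                # Runs on both success and failure, so every call is logged.
                record["duration_ms"] = round((time.perf_counter() - started) * 1000, 2)
                logger.info(json.dumps(record))
        return wrapper
    return decorator


@instrumented("parse_table")
def parse_table(raw):
    # Stand-in for a custom step; splits a pipe-delimited row into cells.
    return [cell.strip() for cell in raw.strip("|").split("|")]
```

Because each record carries the captured parameters, you can replay a failing call outside the visual canvas with the exact inputs that broke it.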

“Instrument early and keep logs handy—observability makes the difference between a quick fix and a stalled rollout.”

Alternatives to Flowise AI

If the platform’s limits—debugging depth, interface quirks, or customization needs—slow you down, other platforms and tools can fill those gaps. Below I list practical alternatives and when to pick each one.

Langflow

I recommend Langflow when you want a beginner‑friendly interface that still lets developers tweak component code in‑platform. It’s great for rapid prototyping and experiments that need light customization.

Dify

Dify shines if debugging and iteration are your top priorities. Its run histories, nested workflow logs, and concise components help teams ship with confidence.

Voiceflow

Choose Voiceflow for best‑in‑class conversational UX. It excels at front‑end design for chat and voice and pairs well with backend orchestration tools for production apps.

n8n

Think of n8n as the automation glue—routing leads, triggering Slack messages, and syncing CRMs—while another tool handles the “smart” parts of your applications.

Microsoft Copilot Studio

For Microsoft‑centric shops, Copilot Studio offers a tight enterprise fit: governance, SSO, and familiar ecosystem controls for regulated deployments.

| Product | Strength | Best for | Notes |
|---|---|---|---|
| Langflow | Easy prototyping + code tweaks | Developers building demos | Good customization, growing community |
| Dify | Deep debugging & run history | Teams needing robust iteration | Strong component model, fast testing |
| Voiceflow | Conversational UX designer | Customer‑facing chat/voice | Pair with backend orchestration |
| n8n | Workflow automation | CRM/ops integrations | Complements agent backends well |
| Copilot Studio | Enterprise ecosystem | Microsoft‑standard orgs | Governance and compliance focus |

How to choose: match your top constraint—debugging, UX, or enterprise fit—to the right platform. Often a hybrid stack (voice or automation front end plus a backend orchestration tool) wins more use cases than any single option.

Case Study / Personal Experience of Flowise AI

I built two production projects that turned internal documents into practical applications. One became a document‑grounded support assistant for customers. The other served as an internal copilot that pulls SOPs and policies for staff.

Real‑world results: from document‑grounded support to internal copilots

For the customer support project we ingested PDFs and SQL exports, connected retrievers, and tuned prompts so answers stayed grounded in the company data.

We added a human review checkpoint before live replies. That saved us from embarrassing mistakes and improved trust with early users.

Outcomes: faster first response times, broader document coverage, and fewer escalation tickets.

My experience: building a multi‑step agent with RAG and human review

I used a multi‑step agent approach: one agent retrieves, another summarizes, a third drafts the reply. Agentflow kept the workflows organized and testable.
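
The retrieve, summarize, draft chain can be sketched in plain Python. The three functions below are stand-ins for LLM-backed agents (simple word overlap and truncation replace real retrieval and summarization), and all names are hypothetical; the point is the hand-off structure, not the logic inside each step.

```python
def retrieve_agent(question, knowledge_base):
    """Pick passages that share at least one word with the question."""
    terms = set(question.lower().split())
    return [p for p in knowledge_base if terms & set(p.lower().split())]


def summarize_agent(passages, max_chars=200):
    """Stand-in for an LLM summary: join passages and truncate."""
    return " ".join(passages)[:max_chars]


def draft_agent(question, summary):
    """Stand-in for an LLM drafting step."""
    if not summary:
        return "I could not find this in the documents; escalating to a human."
    return f"Regarding '{question}': {summary}"


def run_pipeline(question, knowledge_base):
    """Chain the three agents and keep each intermediate output for inspection."""
    passages = retrieve_agent(question, knowledge_base)
    summary = summarize_agent(passages)
    reply = draft_agent(question, summary)
    return {"passages": passages, "summary": summary, "reply": reply}
```

Returning the intermediate outputs alongside the reply is what made each stage testable on its own in my builds.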

I wrote custom code in a sandboxed step to parse complex tables. Heavy processing lived outside the main path to keep latency low.

Debugging pushed me to external LLMOps hooks and careful logging—effective, but I missed deeper in‑canvas run histories during fast iteration.

- Development highlight: rapid assembly of RAG pipelines reduced initial build time.

- Operational note: HITL checkpoints caught edge cases before customers saw them.

- Next time: predefine error branches to cut re‑runs and speed incident handling.

| Goal | Approach | Result |
|---|---|---|
| Customer support bot | PDF/SQL ingestion + retrievers + HITL | Lower response time, fewer escalations |
| Internal copilot | Multi‑agent RAG with summarization | Faster staff answers, higher SOP coverage |
| Development practice | Sandbox code steps + external tracing | Stable self‑hosted runs; lighter native debug |

“Human checkpoints and modular agents turned a brittle prototype into a reliable system.”

Conclusion

After testing the platform in real projects, here’s a concise recommendation for teams deciding whether to pilot it. The tool blends visual speed with enterprise controls, making it easy to build RAG assistants and multi‑agent applications without starting from code.

I find that Flowise offers a sensible balance: fast iteration for builders and governance for ops. If you value production-grade observability and HITL gates, this platform will meet many of your needs.

For developers who need deep experiment tracking, try Dify alongside it. If prototyping with code tweaks matters, add Langflow. Start on the free tier, reproduce one high‑value workflow, add HITL at risk points, and connect LLMOps from day one.

Action: spin up a pilot this week—define clear metrics and decide within 14 days if the platform fits your long‑term stack.

Frequently Asked Questions

What is Flowise and who should consider using it?

Flowise is an open-source, low-code orchestration platform designed to visually build workflows and AI agents using LangChain components. I recommend it if you’re a developer, product lead, or citizen builder who wants rapid prototyping with control — especially for RAG-based assistants, multi-agent systems, or teams that prefer self-hosting and extensibility.

How does Flowise compare to Langflow, Dify, and Voiceflow?

Flowise focuses on visual orchestration with strong support for multi-agent flows and RAG patterns. Langflow is more beginner-friendly for prototypes and code-level tweaks; Dify excels at debugging and sub-workflow logging; Voiceflow targets conversational UX and voice experiences. Choose based on your need for debugging, UX polish, or deployment control.

Is Flowise suitable for enterprise deployments and regulated environments?

Yes — Flowise supports self-hosting and on-prem deployments, role-based access controls, and observability integrations (Prometheus, OpenTelemetry). For regulated teams you’ll want to evaluate compliance needs, logging practices, and data residency as part of your deployment plan.

What are the main features that speed up development?

The visual workflow builder, templates and marketplace, Chatflow for single-agent assistants, Agentflow for multi-agent systems, HITL checkpoints, and built-in integrations with vector databases and LLMOps tools accelerate delivery and iteration.

What’s the learning curve like?

There’s a modest learning curve when you move beyond basic chatflows to complex nested workflows and conditional logic. I find the visual interface helps, but advanced flows require familiarity with LangChain concepts and debugging practices.

How does Flowise handle observability and debugging?

It offers execution tracing, Prometheus and OpenTelemetry support, and logs for flows. Native debugging is improving, but some peers still offer deeper step-level debug tooling — so expect to combine built-in traces with external monitoring for production systems.

What integrations and data sources are supported?

Flowise integrates with common vector databases, external tools, webhooks, and third-party LLMOps providers (LangSmith, LangFuse, Lunary, LangWatch). That makes it straightforward to connect document stores, APIs, and tools used in real-world workflows.

Can I run code safely inside flows?

Yes — Flowise supports code execution nodes, but sandboxing and security depend on your deployment. For sensitive environments I advise strict sandboxing, network restrictions, and thorough review of execution policies before enabling code nodes.

What are the pricing and plan options?

There’s a free tier suitable for getting started with flows and predictions. Paid tiers (Starter, Pro) scale teams, roles, and prediction volume, while enterprise plans add compliance, governance, and deployment flexibility. Exact pricing varies — check the official site for current tiers and limits.

How active is the community and marketplace?

The community is growing — you’ll find templates, marketplace momentum, and third-party contributions that speed up common use cases. Community support is useful for templates, troubleshooting, and sharing best practices.

Which use cases work best with Flowise?

It shines for document-grounded support assistants, internal copilots, multi-step automation with human review, and prototypes that need quick iteration plus deployment control. If you need highly polished conversational UX or voice-first features, consider complementary tools.

What are the main limitations to be aware of?

Expect a steeper climb for very complex flows, limited native debugging compared to some competitors, and the need to manage observability and security in self-hosted setups. You’ll trade some out-of-the-box polish for flexibility and control.

How do LLMOps integrations improve workflow management?

Integrations with LLMOps providers enable model telemetry, prompt and chain tracing, and performance monitoring — which helps you tune prompts, track hallucinations, and maintain model governance across projects.

Is it easy to embed a chat UI or integrate Flowise into an app?

Yes — Flowise offers front-end options including embeddable chat UI and APIs that let you integrate assistants into web apps, knowledge bases, and internal tools. You may need frontend work to match branding and UX requirements.

What should I test before choosing Flowise for production?

Test stability under realistic load, execution tracing fidelity, role-based access and audit logging, vector DB integrations, and how well your HITL processes work. Also validate deployment automation and backup/restore for your chosen hosting model.