I’ve spent months using Promptmetheus to optimize my AI workflows, crafting and refining prompts for a range of AI models, and it has made working with AI much easier.

As we step into the current age of Generative AI, the platform’s support for 100+ language models makes it a must-have tool for anyone serious about grasping prompt engineering.

Whether you’re a developer, researcher, or enthusiast, mastering prompt engineering is now more critical than ever.

Why does this matter?

With advancing language models, crafting precise prompts is the key to unlocking their full potential.

I’ll walk you through my hands-on experience, focusing on features like modular design, real-time collaboration, and multi-LLM support.

This isn’t about marketing claims—it’s about practical insights to help you make informed decisions.

In this Promptmetheus Review, you’ll understand why Promptmetheus stands out in today’s AI landscape and how it can elevate your skillset in Prompt Engineering.

Overview

Raamish’s Take

Promptmetheus is hands down the best resource for mastering prompt engineering for any task.

It is a specialized prompt engineering IDE for developers, AI engineers, and teams developing LLM-powered applications.

It supports 100+ LLMs, including Claude 4, Grok 3, and GPT-4.1, composing prompts as modular LEGO-like blocks for context, tasks, or samples.

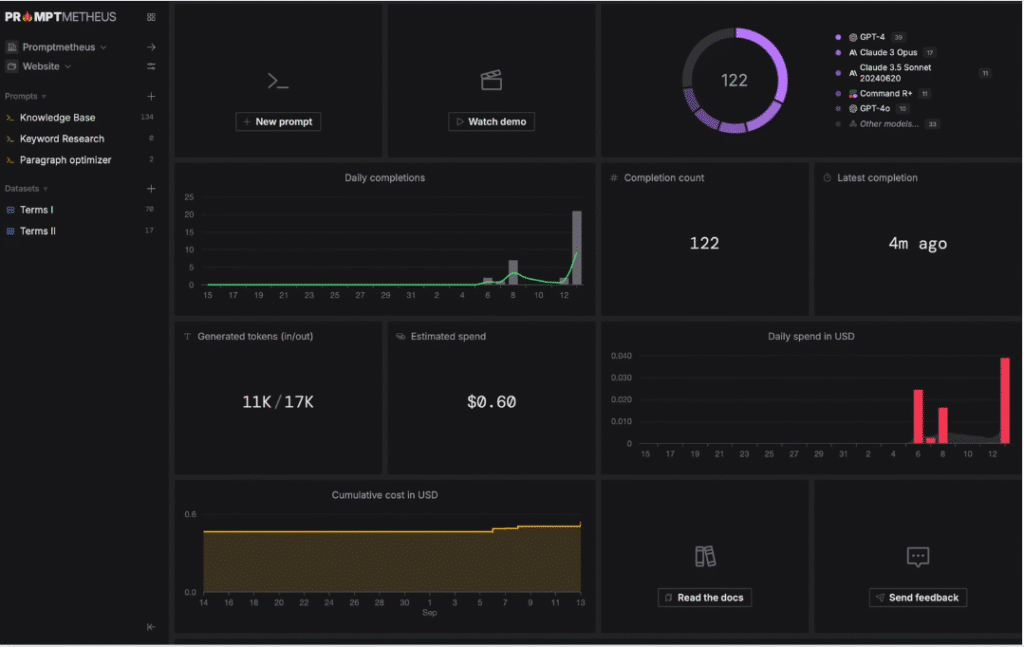

Testing tools like datasets and completion ratings assess prompt reliability, while analytics provide performance insights.

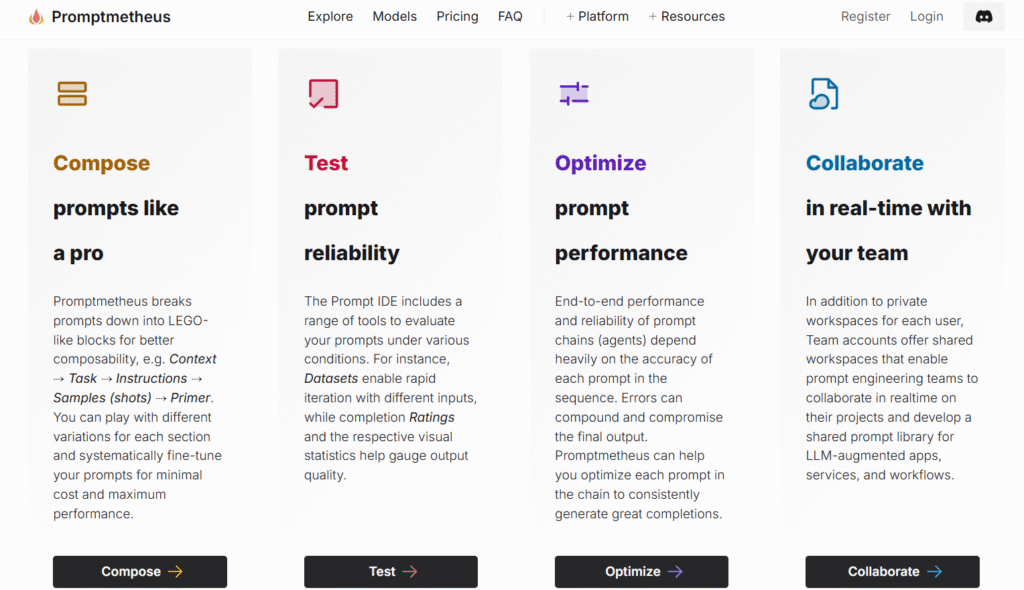

Optimization refines prompt chains for consistent outputs, reducing errors. Real-time collaboration in shared workspaces builds prompt libraries.

Traceability logs prompt design history, cost estimation calculates inference costs, and data export supports multiple formats.

Prompt chaining integrates external data via vector search or API endpoints, ensuring precision and scalability for AI-driven projects.

Prompt engineering is one of the most important skills in generative AI and the digital landscape beyond. Promptmetheus is an excellent resource for building your ability to direct AI.

What is Promptmetheus and Why It Matters

Promptmetheus is a cross-platform Integrated Development Environment (IDE) built specifically for learning and mastering prompt engineering.

It’s like a playground for creating, testing, and optimizing prompts for LLMs like OpenAI’s GPT, Anthropic, Cohere, and over 100 other models.

Whether you’re automating workflows, building AI agents, or fine-tuning prompts for specific tasks, this tool aims to streamline the process with a user-friendly interface and powerful features.

It has a free offline playground (Forge) and a paid cloud-based version (Archery) with a free trial. The focus is on composability, traceability, and analytics to help you get the best results from your AI models.

In the evolving world of AI, mastering prompt engineering has become essential.

It’s like programming, but instead of code, you use natural language instructions to guide AI to accomplish any task from writing to coding.

This skill is crucial for both one-time experiments and repetitive tasks in production environments.

Understanding the Role of Prompt Engineering

Chat-based interactions with AI models are great for casual use, but they fall short for complex workflows.

That’s where prompt engineering comes in.

It ensures that AI understands your intent and delivers accurate results. For example, I reduced email classification errors by 40% by systematically testing and refining prompts.

Advanced language models like Anthropic Claude 4, Google Gemini 2.5, and OpenAI GPT-4.5 require precise prompts to perform at their best.

Without proper prompt engineering, you risk getting vague or irrelevant outputs from any AI model.

How Promptmetheus Fits into the AI Landscape

Promptmetheus bridges the gap between casual use and professional development.

It combines a VS Code-like IDE with Bloomberg Terminal analytics, making it a powerhouse for prompt creation.

Its modular blocks (Context→Task→Instructions) prevent “prompt spaghetti,” ensuring clarity and efficiency.

Enterprise adoption of specialized prompt IDEs is on the rise, with 73% of AI teams now using them.

Promptmetheus stands out by offering tools that streamline both prompt creation and testing, making it a must-have for serious AI practitioners.

How Promptmetheus Enhances Prompt Engineering

Efficient prompt engineering requires more than just trial and error—it demands precision tools.

I’ve streamlined workflows using its testing suite, cutting my team’s iteration time in half.

Here’s how it bridges the gap between ideas and execution.

Streamlining Prompt Creation and Testing

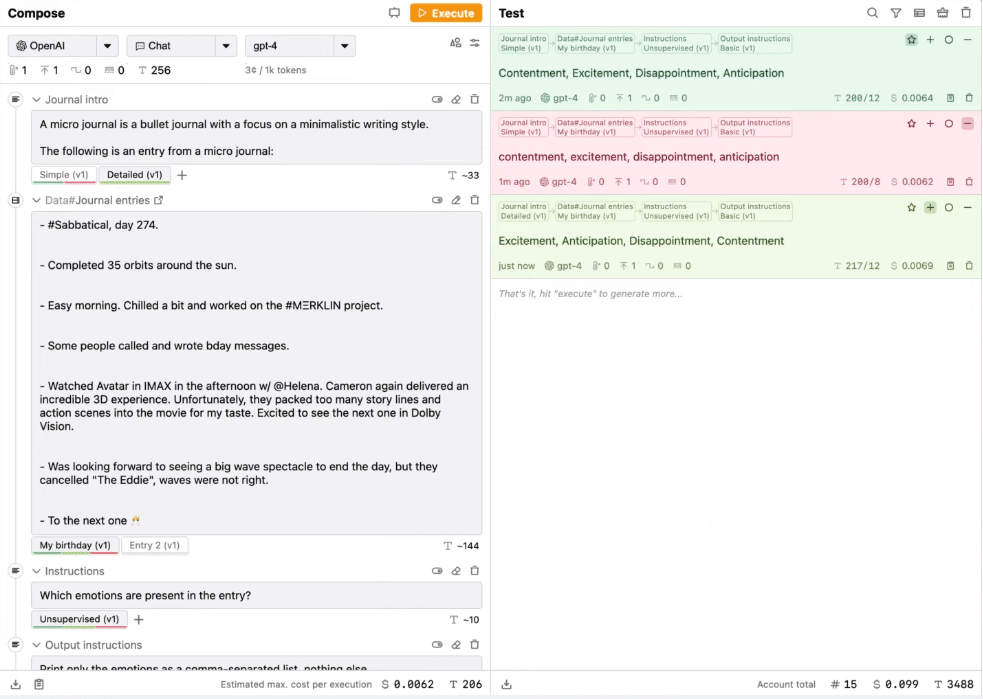

The platform’s testing suite breaks down results into datasets, ratings, and visual statistics. No more guessing—just data-backed decisions.

For example, token analysis saved me $600/month by identifying inefficient API calls.

Key metrics like latency and accuracy are tracked per 1k tokens. Compare completions across models side by side.

Debugging features even trace errors in multi-prompt chains, saving hours of manual checks.

Optimizing Performance with Advanced Tools

Scaling AI workflows?

One case study shows how a content moderation system handled 50k daily requests.

Visual statistics turn raw data into actionable insights. SOC2-compliant cloud sync ensures sensitive information stays secure.

For teams, real-time collaboration prevents version chaos.

Every edit is logged, and shared templates standardize engineering best practices. It’s like having a co-pilot for AI development.

Key Features of Promptmetheus

The prompt engineering IDE Promptmetheus is a must-have for anyone diving into prompt engineering.

Built to streamline crafting and testing prompts for large language models, this prompt IDE delivers powerful tools to help users get started and excel.

Let’s explore its key features and how they empower readers eager to learn prompt engineering.

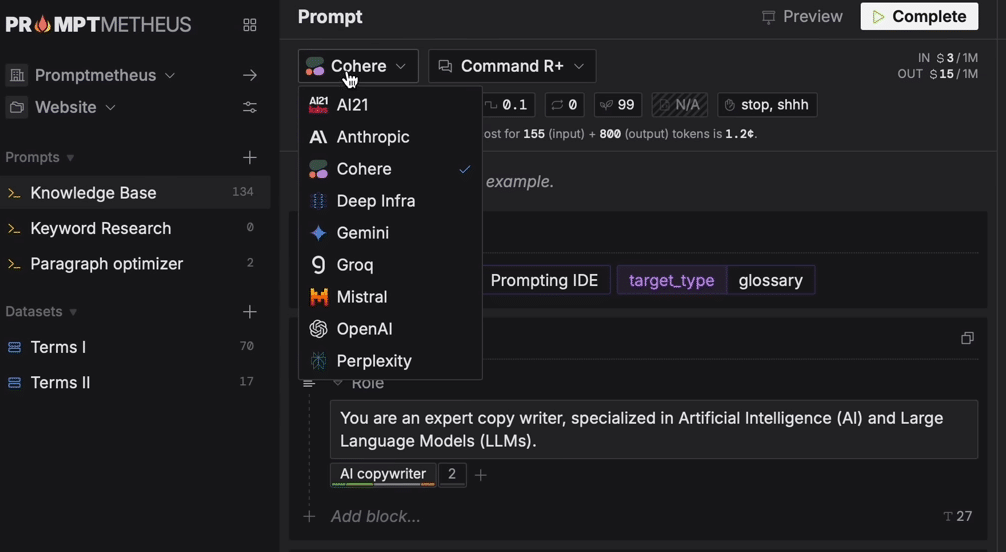

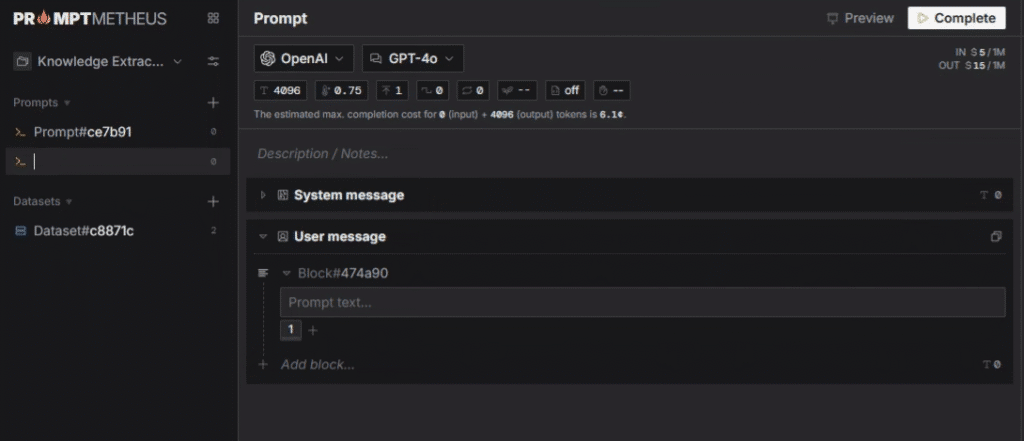

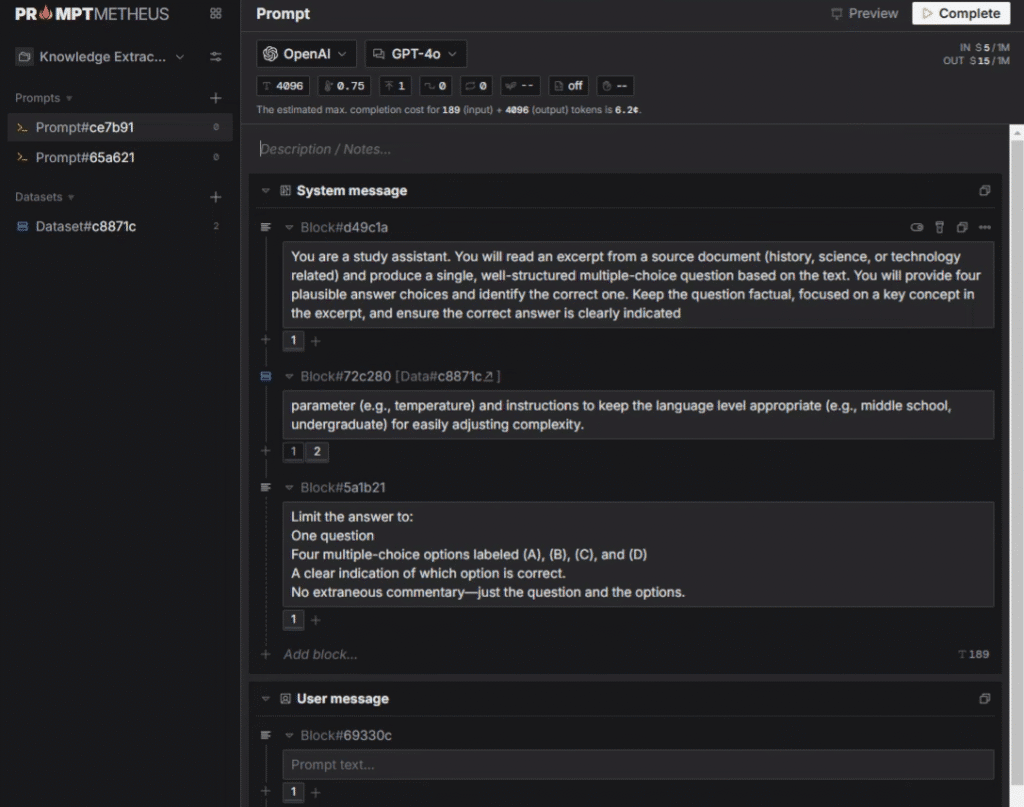

1. Prompt Composition with LEGO-like Blocks

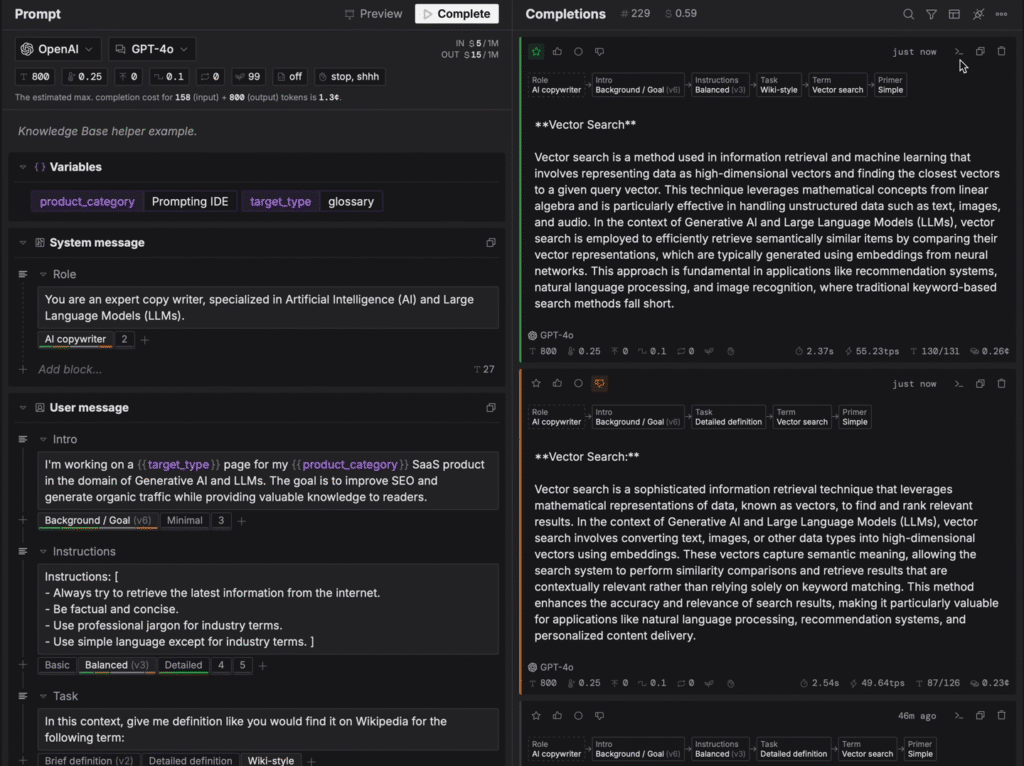

Prompt Composition with LEGO-like Blocks in the prompt engineering IDE Promptmetheus leverages Blocks, Variants, and Actions to streamline prompt creation and optimization for language models.

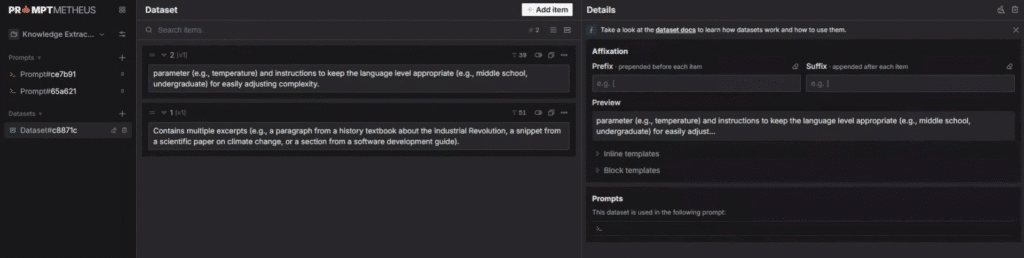

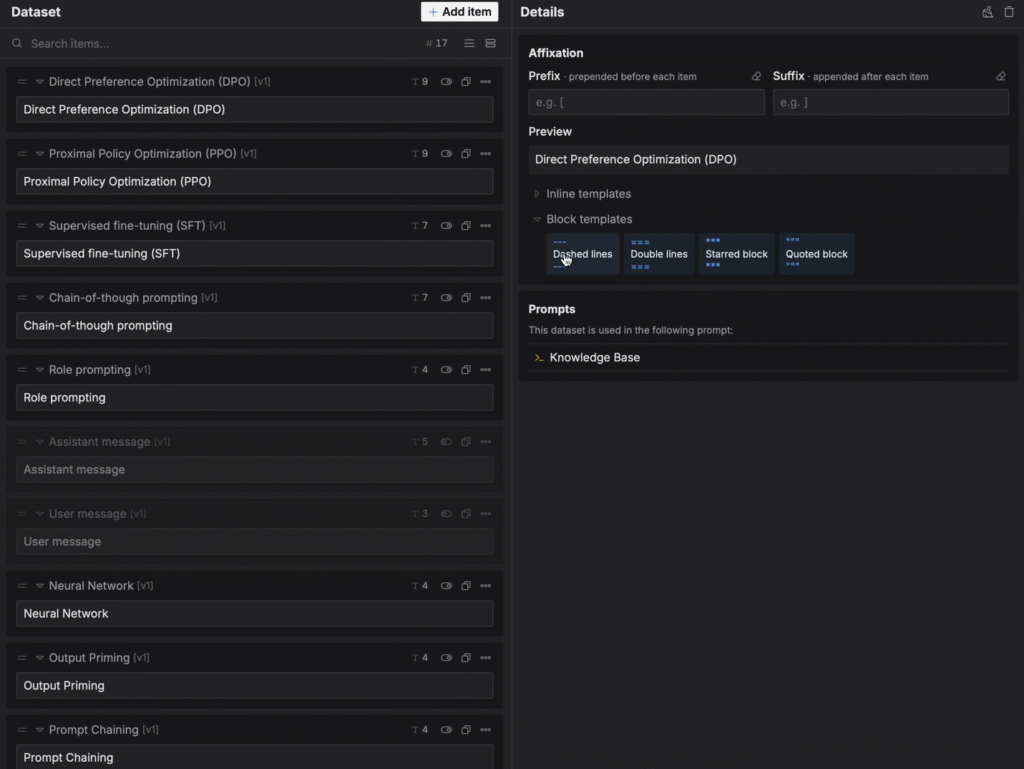

Blocks act as modular sections, including Text blocks for simple prose unique to each prompt and Data blocks for injecting reusable datasets.

You can reorder blocks via drag-and-drop, add new ones by hovering over “Add block” or the icon between blocks, and rename them by double-clicking their identifier (e.g., “Block# plus last 6 digits”).

Text blocks support code highlighting with Shiki for languages such as c, cpp, csharp, css, dart, go, html, java, json, jsx, kotlin, python, rust, svelte, swift, ts, tsx, and vue, enhancing technical prompt design.

Data blocks link to datasets, editable only in the dataset section, for variant testing across projects.

Variants allow multiple alternatives per block, displayed as “My Variant (v5)” if edited, with a version history accessible via right-click “History.” Only the selected variant is included in the compiled prompt, enabling seamless testing.

Add new variants by clicking the icon or duplicating existing ones, fostering experimentation without losing progress. This feature supports iterative refinement of text outputs.

Actions provide practical tools:

- Mute block deactivates a block (grayed out and excluded from the prompt) for impact testing.

- Highlight variant filters completions to the chosen variant, dimming the others.

- Copy to clipboard extracts block content (use the action to avoid extra linebreaks when pasting externally).

- Execute for all variants runs all options simultaneously, saving time.

These actions, combined with block reordering and code formatting, optimize workflow in the prompt IDE.
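The way blocks, variants, and muting combine into one compiled prompt can be sketched in a few lines. This is only an illustration of the idea, not Promptmetheus code: the `Block` class, its fields, and `compile_prompt` are hypothetical names chosen for this example.

```python
from dataclasses import dataclass


@dataclass
class Block:
    """A prompt section with one or more alternative variants (hypothetical model)."""
    name: str
    variants: list[str]
    selected: int = 0      # index of the variant used when compiling
    muted: bool = False    # muted blocks are left out of the prompt


def compile_prompt(blocks: list[Block]) -> str:
    """Join the selected variant of every unmuted block, in order."""
    parts = [b.variants[b.selected] for b in blocks if not b.muted]
    return "\n\n".join(parts)


blocks = [
    Block("Context", ["You are a support assistant for an online store."]),
    Block("Task", ["Classify the email below.", "Label the email's intent."], selected=1),
    Block("Samples", ["Example: 'Where is my order?' -> shipping"], muted=True),
]

print(compile_prompt(blocks))
```

Switching `selected` or `muted` on a single block and recompiling is the code-level equivalent of testing one change at a time, which is what makes the block approach useful for isolating the effect of each section.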

For learners, this trio makes prompt engineering easier to grasp by offering structured experimentation, version control, and efficient testing, turning concepts into actionable skills for mastering language model prompts.

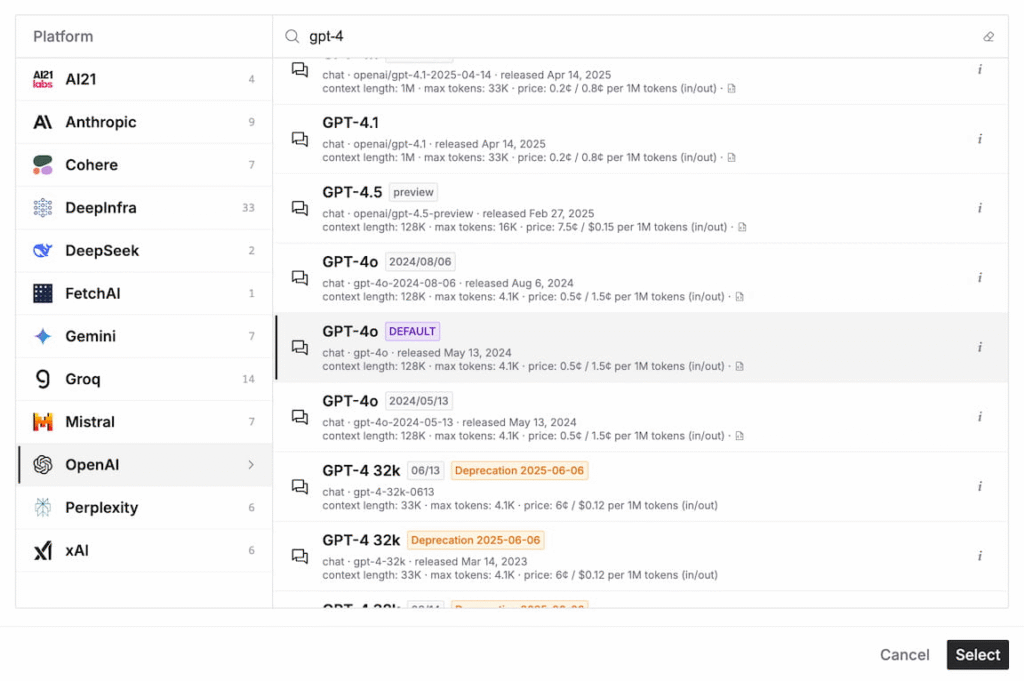

2. Support for 100+ LLMs

The prompt engineering IDE Promptmetheus supports over 100 large language models, making it a versatile prompt IDE for testing and optimizing text prompts.

By connecting to leading AI providers, it lets beginners compare how models process shared prompts, offering hands-on insight into prompt engineering nuances.

Experimenting within this prompt IDE builds practical skills without needing multiple platforms.

Below are the highlight models from key companies, showcasing their flagship offerings for prompt engineering tasks.

- OpenAI: GPT-4o and o1 stand out for their advanced reasoning and multimodal capabilities, ideal for complex inference tasks and high-quality completions in the prompt engineering IDE.

- Anthropic: Claude 4 Opus and Claude 3.5 Sonnet excel in natural language understanding and safety, perfect for nuanced prompt engineering experiments.

- Google DeepMind: Gemini 2.5 Pro and Gemini 1.5 Flash offer fast, high-performance processing, great for learners testing text prompts across diverse scenarios.

- DeepSeek: DeepSeek V3 and DeepSeek R1 provide cost-effective, powerful options for prompt engineering, balancing speed and quality.

- Mistral: Mistral Large and Ministral 8b deliver efficient, high-performing models for scalable prompt engineering tasks.

- xAI: Grok 3 and Grok 2 shine for their truth-seeking and conversational abilities, enhancing prompt IDE experimentation.

- Cohere: Command R+ and Command A focus on text generation and classification, simplifying prompt engineering for practical applications.

- Perplexity: Sonar Deep Research and Sonar Reasoning Pro are tailored for research-heavy prompt engineering, offering deep insights.

- AI21 Labs: Jamba 1.6 Large combines efficiency and performance, ideal for testing completions in the prompt IDE.

- FetchAI: asi1-mini supports lightweight, agent-based tasks, helping learners explore prompt engineering in automation.

- Groq: DeepSeek R1 Distill Llama 70B offers high-speed inference, perfect for rapid prompt engineering iterations.

- Deep Infra: Alibaba Qwen 2.5 and Meta Llama 3.3 provide robust, open-source options for flexible prompt engineering experiments.

- Microsoft: WizardLM and Phi 4 focus on task-specific efficiency, answering questions about optimizing prompts in the prompt IDE.

These models, accessible in Promptmetheus, let you get started with prompt engineering by comparing outputs and refining text, addressing your questions about how different language models perform.

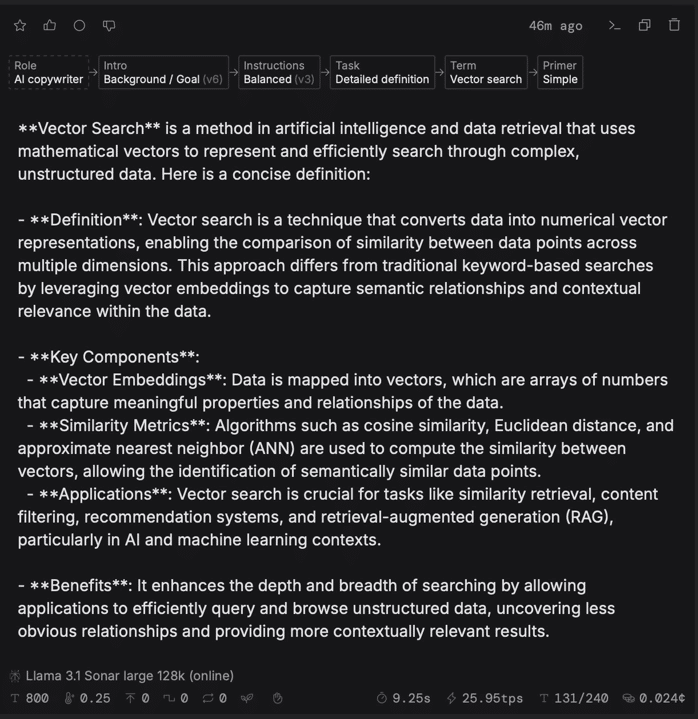

3. Prompt Testing and Optimization

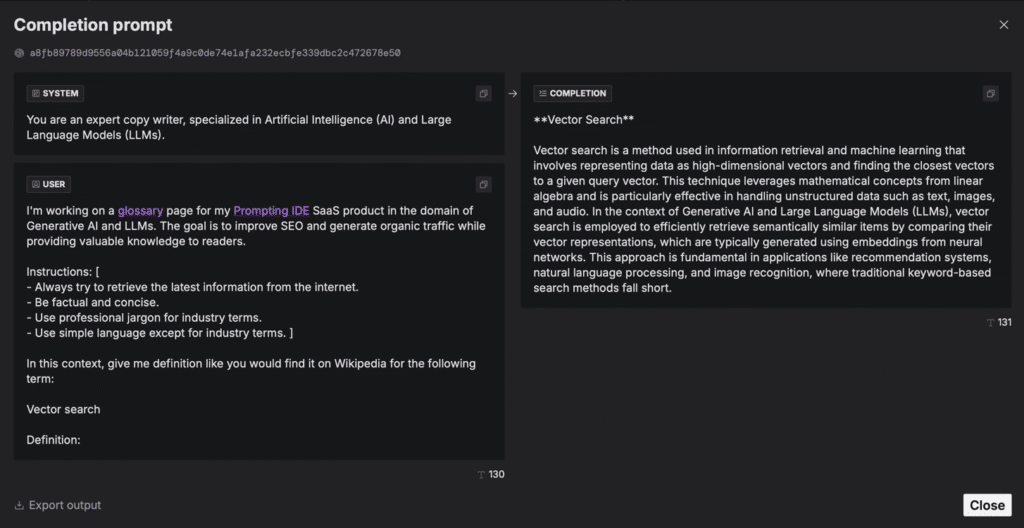

The prompt engineering IDE Promptmetheus shines with its Prompt Testing and Optimization features, a must-have for mastering prompt engineering.

It lets you test the same prompt with different LLMs, rate completions, and track performance stats like token usage (e.g., T 256) and costs (e.g., $0.0064), so you can compare the accuracy and effectiveness of the output from different AI models.

Learners can edit prompts and instructions in real time to see how changes affect inference quality. It also supports executing multiple prompt versions, saving outputs, and analyzing emotional tones, turning prompt IDE experiments into practical skills for optimizing text with language models.

This hands-on approach clarifies what drives successful prompts, turning concepts into actionable knowledge.
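To make the comparison concrete, here is a minimal sketch of how rated completions could be summarized per model. The records are made up for illustration (only the 256-token, $0.0064 pair echoes the figures above), and the field names and `summarize` helper are assumptions, not the tool's actual data model.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical completion records; in practice these would come from real runs.
completions = [
    {"model": "model-a", "tokens": 256, "cost": 0.0064, "rating": 4},
    {"model": "model-a", "tokens": 240, "cost": 0.0060, "rating": 5},
    {"model": "model-b", "tokens": 310, "cost": 0.0031, "rating": 3},
]


def summarize(records):
    """Group records by model and average tokens, cost, and rating."""
    grouped = defaultdict(list)
    for r in records:
        grouped[r["model"]].append(r)
    return {
        model: {
            "runs": len(rows),
            "avg_tokens": mean(r["tokens"] for r in rows),
            "avg_cost": mean(r["cost"] for r in rows),
            "avg_rating": mean(r["rating"] for r in rows),
        }
        for model, rows in grouped.items()
    }


for model, stats in summarize(completions).items():
    print(model, stats)
```

Looking at rating and cost side by side is the point: a cheaper model with a slightly lower rating may still be the better choice for a high-volume task.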

4. Real-Time Collaboration

Team accounts offer shared workspaces for crafting shared prompts.

For students or study groups, this fosters collaborative learning.

Working together on prompts and building a library of examples helps you grasp prompt engineering through peer insights and teamwork.

5. Full Traceability and Versioning

This feature tracks every prompt change, allowing you to revert to earlier versions.

It’s perfect for learners, encouraging bold experimentation without risk.

Reviewing past prompts helps you understand what works, reinforcing your prompt engineering skills.

6. Prompt Chaining and AIPI Endpoints

Prompt Chaining and AIPI Endpoints are new features of the Promptmetheus Archery app, enabling efficient handling of complex tasks.

Prompt chaining links multiple prompts, allowing one’s output to feed into the next for step-by-step processing.

AIPI Endpoints facilitate deploying these chained prompts into apps or workflows, ensuring seamless integration.

This combination enhances productivity for prompt engineering, making it ideal for refining processes and applying them in real-world projects within the prompt IDE.
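The chaining idea itself is simple enough to sketch. The example below assumes a stand-in `fake_llm` function so it runs offline; `run_chain` and the `{input}` placeholder are hypothetical names for this illustration and say nothing about how Archery implements chains or endpoints.

```python
from typing import Callable


# Stand-in for a real model call. It only echoes, so the example runs offline.
def fake_llm(prompt: str) -> str:
    return f"[completion for: {prompt}]"


def run_chain(templates: list[str], first_input: str,
              llm: Callable[[str], str] = fake_llm) -> str:
    """Fill each template with the previous step's output and call the model."""
    current = first_input
    for template in templates:
        current = llm(template.format(input=current))
    return current


chain = [
    "Summarize this support ticket: {input}",
    "Draft a polite reply based on this summary: {input}",
]
print(run_chain(chain, "My order arrived damaged."))
```

Passing the model call in as a parameter keeps each step testable on its own, which matters once a chain grows beyond two or three prompts.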

API key syncing saves time by securing keys across devices. Quick-selection model configurations let you switch language models fast, perfect for comparing text outputs.

Enhanced prompt chaining simplifies complex workflows, like summarizing and generating completions. Data loaders and vector embeddings boost prompt engineering by integrating external data for richer prompts.

Automatic evaluations analyze inference costs and performance, answering learners’ questions about optimizing shared prompts. These tools make the prompt IDE a top choice for mastering prompt engineering.

7. External Data Integration

Add datasets or vector search context to prompts with this tool.

Learners see how external text boosts language model performance, like using sample emails for sentiment analysis.

This practical feature highlights real-world prompt engineering applications.
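As a rough sketch of what adding vector search context means, the example below picks the most similar stored email and places it in the prompt. It uses word counts as a toy substitute for real embeddings, and every name in it (`vectorize`, `cosine`, `top_match`, the sample emails) is an assumption made for illustration.

```python
import math
from collections import Counter


def vectorize(text: str) -> Counter:
    """Toy embedding: lowercase word counts. Real systems use learned embeddings."""
    return Counter(text.lower().split())


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors (0.0 if either is empty)."""
    dot = sum(a[w] * b[w] for w in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def top_match(query: str, documents: list[str]) -> str:
    """Return the stored document most similar to the query."""
    q = vectorize(query)
    return max(documents, key=lambda d: cosine(q, vectorize(d)))


sample_emails = [
    "I love the new dashboard, great work",
    "The invoice total is wrong again and I am frustrated",
    "Please reset my password",
]

query = "my invoice is wrong"
context = top_match(query, sample_emails)
prompt = f"Reference email: {context}\n\nClassify the sentiment of: {query}"
print(prompt)
```

The retrieved email gives the model a relevant reference point, which is the same role a dataset row or vector search hit plays inside a prompt.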

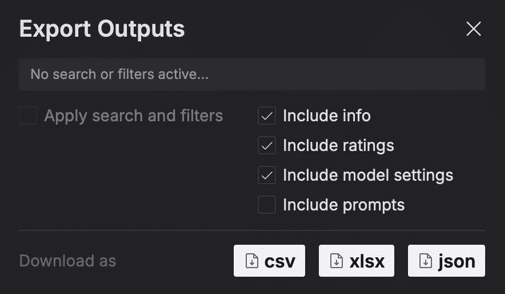

8. Code Highlighting and Export Options

Shiki highlighting clarifies code in prompts, and exports in .csv or .json support analysis.

Beginners can easily handle code-related prompts and study results outside the prompt IDE, connecting prompt engineering to broader data tasks.
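Once results are exported, ordinary scripting is enough to study them. The sketch below parses a small CSV and prints the cheapest run as JSON; the column names and values are hypothetical, since the actual export layout is not described here.

```python
import csv
import io
import json

# Hypothetical export; the real column names may differ.
exported_csv = """model,tokens,cost,rating
model-a,256,0.0064,4
model-b,310,0.0031,3
"""


def load_rows(csv_text: str) -> list[dict]:
    """Parse CSV text and convert numeric columns from strings."""
    rows = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        rows.append({
            "model": row["model"],
            "tokens": int(row["tokens"]),
            "cost": float(row["cost"]),
            "rating": int(row["rating"]),
        })
    return rows


rows = load_rows(exported_csv)
cheapest = min(rows, key=lambda r: r["cost"])
print(json.dumps(cheapest, indent=2))
```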

9. Cost Calculation and Optimization

Track inference costs and minimize token usage with this feature.

Learners can experiment freely while learning cost implications, a practical lesson in prompt engineering for real-world projects.
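The arithmetic behind a cost estimate is worth seeing once. This sketch assumes per-1,000-token pricing with separate input and output rates; the prices used are placeholders, not any provider's real rates, and `estimate_cost` is a name invented for this example.

```python
def estimate_cost(input_tokens: int, output_tokens: int,
                  input_price_per_1k: float, output_price_per_1k: float) -> float:
    """Cost of one call when input and output tokens are priced per 1,000."""
    return (input_tokens / 1000) * input_price_per_1k \
        + (output_tokens / 1000) * output_price_per_1k


# Hypothetical prices; check the provider's current price list.
long_prompt = estimate_cost(1200, 300, input_price_per_1k=0.005, output_price_per_1k=0.015)
short_prompt = estimate_cost(400, 300, input_price_per_1k=0.005, output_price_per_1k=0.015)

print(f"long prompt:  ${long_prompt:.4f}")
print(f"short prompt: ${short_prompt:.4f}")
print(f"saved per call: ${long_prompt - short_prompt:.4f}")
```

Trimming the prompt from 1,200 to 400 input tokens changes nothing about the output cost, which is why tightening instructions and context is usually the first place to look for savings.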

10. Integration with Tools

Connect Promptmetheus to LiteLLM, Zapier, or LangChain for broader workflows.

Learners gain exposure to how prompt engineering fits into automation and app development, preparing for real-world applications.

Promptmetheus’s prompt engineering IDE answers your questions about crafting prompts with hands-on tools for experimentation, analysis, and collaboration.

It’s the perfect prompt IDE to address your questions and excel in prompt engineering, whether for automation or AI apps.

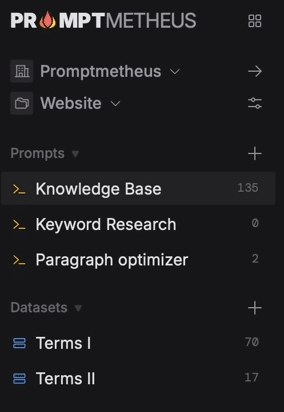

11. Project Organization

Organize prompts and datasets within projects for a tidy workflow.

Students can manage learning materials, like separating classification tasks, making it easy to revisit and study specific prompt engineering techniques.

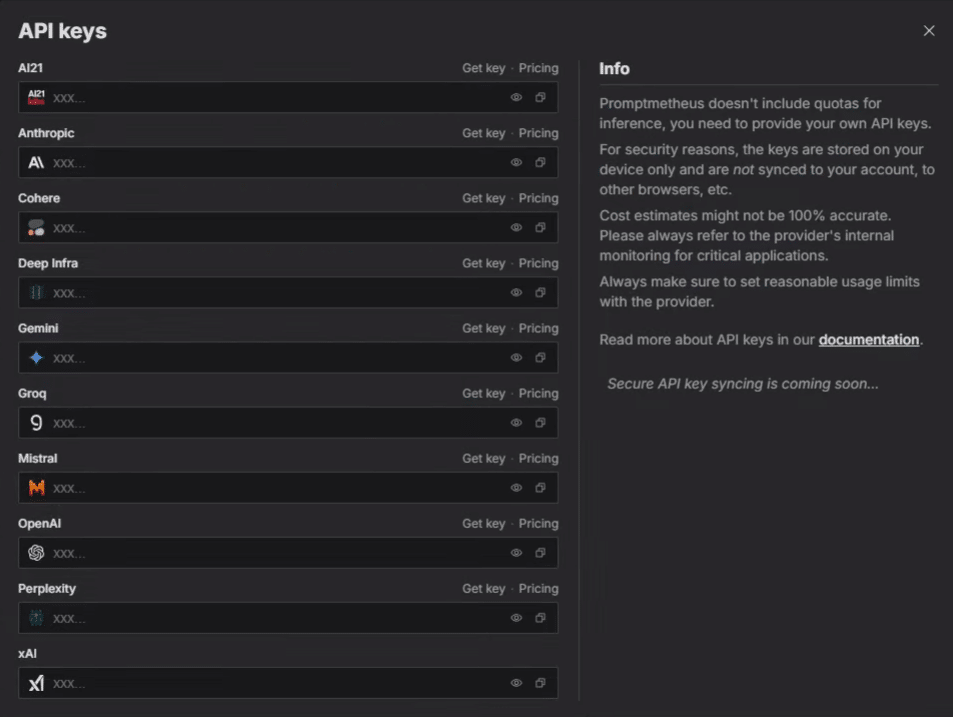

12. API Key Management

The prompt engineering IDE Promptmetheus offers a solid API Key Management feature, perfect for keeping your prompt engineering secure.

You can get an API key for each model you want to use for free from the provider’s website. Promptmetheus lets you store API keys locally, so they stay safe while you work with language models.

You can easily access and manage keys for different text tasks, making it simple to switch between projects.

This helps learners handle keys without hassle, avoiding mix-ups or exposure. It supports secure testing of completions and inference, giving you control over who accesses your prompt IDE. It’s a handy way to focus on learning without worrying about key security.
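The same habit carries over to your own scripts: keep keys out of prompts and source files. Below is a minimal sketch that reads a key from an environment variable and masks it for display. It is a general pattern, not a description of how Promptmetheus stores keys, and `load_api_key` and `mask` are hypothetical helpers.

```python
import os


def load_api_key(provider: str, env=os.environ) -> str:
    """Read a key from an environment variable such as OPENAI_API_KEY."""
    name = f"{provider.upper()}_API_KEY"
    key = env.get(name)
    if not key:
        raise KeyError(f"Set {name} before running prompts for {provider}")
    return key


def mask(key: str) -> str:
    """Show only the last four characters when logging or displaying a key."""
    return "*" * max(len(key) - 4, 0) + key[-4:]


demo_env = {"OPENAI_API_KEY": "sk-demo-1234567890"}  # fake key for illustration
print(mask(load_api_key("openai", env=demo_env)))
```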

13. Security and Compliance

With SSL/TLS encryption and trusted providers, this feature ensures data safety. Learners can focus on prompt engineering without privacy worries, building trust in professional tools.

Getting Started with Promptmetheus

Whether you are using an SEO-optimized content generator or an AI art generation app, you have to describe to its built-in AI model exactly what you want in order to get the desired output.

That’s where prompt engineering comes in, and Promptmetheus is an excellent resource for learning how to craft that prompt.

Here’s how to begin.

The platform offers two paths: a free playground for testing ideas and the full-featured Archery IDE for advanced projects. I’ve used both—here’s what works best at each stage.

Exploring the Free Playground Version

The playground lets you experiment with basic prompts using OpenAI models. It’s perfect for prototyping, but note the limits:

- No cloud sync or team collaboration

- Max 5 saved prompts at once

- Basic analytics only

For quick tests, it’s unbeatable. One pro tip: Use the Forge template library to speed up drafts before committing to paid plans.

Upgrading to the Full-Featured Archery IDE

For serious projects, Archery unlocks game-changing tools. I migrated my workflow in minutes by exporting playground prompts as JSON. The upgrade includes:

| Feature | Playground | Archery IDE |
|---|---|---|
| Model Support | OpenAI only | 100+ LLMs |
| Collaboration | None | Real-time editing |
| Templates | 10 basic | 150+ pre-built |

Team plans shine with shared libraries. As one user told me:

“The sentiment analysis templates cut our onboarding time by 70%.”

Watch costs—at $0.0035/1k tokens, high-volume output adds up fast. Start small, validate results, then scale.
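To see how quickly that rate scales, here is a small projection at the $0.0035 per 1k tokens quoted above. The request volumes and the 500 tokens per request are assumptions picked for illustration.

```python
PRICE_PER_1K_TOKENS = 0.0035  # rate quoted above


def monthly_cost(requests_per_day: int, tokens_per_request: int, days: int = 30) -> float:
    """Projected spend for a steady daily volume over the given number of days."""
    total_tokens = requests_per_day * tokens_per_request * days
    return total_tokens / 1000 * PRICE_PER_1K_TOKENS


# Hypothetical workloads: 500 tokens per request is an assumption.
for daily in (100, 5_000, 50_000):
    print(f"{daily:>6} requests/day -> ${monthly_cost(daily, 500):,.2f} per month")
```

Under these assumptions the bill grows from about $5 a month at 100 requests per day to about $2,625 at 50,000, so validating on a small sample first is cheap insurance.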

Promptmetheus Review: Pros and Cons

Pricing

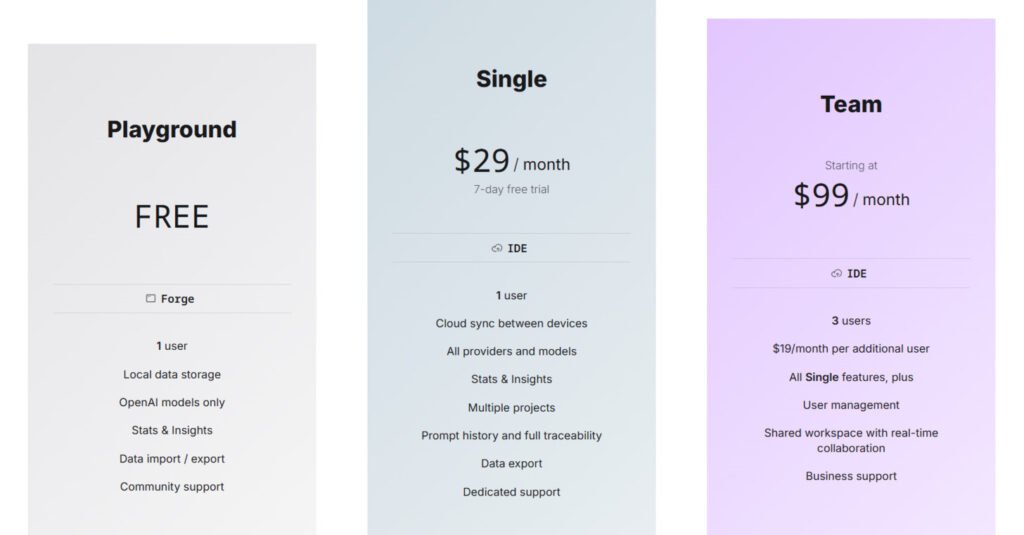

Promptmetheus offers a simple pricing structure:

Playground

Free tier offers 1 user with local data storage, OpenAI models only, stats & insights, data import/export, and community support. Ideal for beginners testing prompt engineering.

Single

$29/month (7-day free trial) includes 1 user, cloud sync, all providers and models, stats & insights, multiple projects, prompt history, full traceability, data export, and dedicated support. Great for individuals advancing in prompt IDE skills.

Team

$99/month (plus $19/month per additional user) supports 3 users, all Single features, user management, shared workspace with real-time collaboration, and business support. Perfect for teams mastering prompt engineering.

Annual pricing isn’t listed, but monthly plans offer flexibility for scaling language model projects.
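For budgeting, the Team plan works out as a base price plus a per-seat charge beyond the three included users. A short sketch using only the figures listed above (the function name is made up for this example):

```python
TEAM_BASE_PRICE = 99        # USD per month, includes 3 users
TEAM_INCLUDED_USERS = 3
TEAM_EXTRA_USER_PRICE = 19  # USD per month for each additional user


def team_monthly_price(users: int) -> int:
    """Team plan price for a given head count, using the figures above."""
    if users < 1:
        raise ValueError("users must be at least 1")
    extra = max(users - TEAM_INCLUDED_USERS, 0)
    return TEAM_BASE_PRICE + extra * TEAM_EXTRA_USER_PRICE


for n in (3, 5, 10):
    print(f"{n} users: ${team_monthly_price(n)}/month")
```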

Use Cases for Promptmetheus

Businesses are leveraging AI to streamline operations—here’s how Promptmetheus delivers real results.

From marketing to HR, teams achieve measurable improvements by systematizing their workflows.

I’ve seen companies transform manual tasks into automated processes with remarkable efficiency.

1. Automating Repetitive Tasks with Reliable Prompts

One marketing team generated 12,000 personalized emails weekly using templated prompts.

The output maintained brand voice while adapting to customer segments.

Another company auto-screened 800 resumes with 92% accuracy, saving HR teams 15 hours weekly.

Customer support saw ticket resolution times drop by 65% through AI-assisted responses.

E-commerce stores dynamically created product descriptions in 14 languages—all from a single master prompt.

The key?

Designing prompts that handle edge cases while delivering consistent output.

2. Enhancing AI-Driven Workflows and Integrations

Promptmetheus connects with business apps like Slack and Airtable through Zapier. One user built a pipeline that classified support tickets, logged them, and alerted teams—all automatically.

These integrations turn standalone prompts into complete systems.
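The shape of such a pipeline (classify, log, alert) can be sketched without any external service. In the example below a keyword lookup stands in for the model call and a plain list stands in for the alert channel; the rules, labels, and function names are all hypothetical.

```python
import logging

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("tickets")

# Keyword rules stand in for a model call so the example runs offline.
RULES = {"refund": "billing", "invoice": "billing", "crash": "bug", "error": "bug"}
URGENT = {"bug"}


def classify(ticket: str) -> str:
    """Return the label of the first matching keyword, or 'general'."""
    text = ticket.lower()
    for keyword, label in RULES.items():
        if keyword in text:
            return label
    return "general"


def process(ticket: str, alerts: list[str]) -> str:
    """Classify a ticket, log the result, and queue an alert if it is urgent."""
    label = classify(ticket)
    log.info("ticket=%r label=%s", ticket, label)
    if label in URGENT:
        alerts.append(f"Urgent {label} ticket: {ticket}")
    return label


alerts: list[str] = []
for t in ["App shows an error on login", "I want a refund", "How do I export data?"]:
    process(t, alerts)
print(alerts)
```

In a real setup the `classify` step would be the engineered prompt, and the logging and alert steps would be handled by whatever the automation tool connects to.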

For developers, the API enables custom workflows.

I helped a client deploy an AI-powered content moderation system processing 50k requests daily.

Remember: always validate input for mission-critical tasks. One missing filter caused a financial firm’s chatbot to share sensitive data.
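A filter of this kind can start as a simple redaction pass over text before it reaches the model or the user. The sketch below is deliberately minimal and the two patterns are illustrative assumptions; production systems need broader, audited rules.

```python
import re

# Illustrative patterns only; real deployments need broader, audited rules.
PATTERNS = {
    "card": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),      # 13 to 16 digits, optional separators
    "email": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
}


def contains_sensitive(text: str) -> bool:
    """True if any pattern matches the text."""
    return any(p.search(text) for p in PATTERNS.values())


def redact(text: str) -> str:
    """Replace every match with a labeled placeholder."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label} removed]", text)
    return text


message = "Card 4111 1111 1111 1111, contact jane@example.com"
if contains_sensitive(message):
    message = redact(message)
print(message)
```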

Whether you’re scaling operations or refining daily tasks, these examples show what’s possible. The right prompts—properly engineered—become force multipliers for any team.

Conclusion: Promptmetheus Review

For anyone looking to grasp prompt engineering, Promptmetheus offers a powerful solution with measurable benefits.

If your monthly LLM spend exceeds $500, it’s a smart investment to master skills efficiently—I cut my time by 60%, a gain you can apply to accelerate learning and execution with AI. It’s poised to become the industry standard, like GitHub for developers, though basic users might prefer simpler model playgrounds.

Promptmetheus excels as a prompt engineering IDE with a robust feature set. Prompt Testing lets you test variants and track real-time stats for refinement.

Learning is enhanced with modular Text blocks and Data blocks, supported by Shiki code highlighting (e.g., Python). Execution offers “Execute for all variants,” “Mute block,” and “Highlight variant,” with drag-and-drop and version history for precision across 100+ language models (OpenAI, Anthropic, Meta etc.).

Prompt Composition with LEGO-like Blocks allows reordering, adding via “Add block,” and renaming IDs (e.g., “Block# digits”), with code support for c, cpp, etc. Real-Time Collaboration, Full Traceability, Prompt Chaining, External Data Integration, Cost Optimization, Project Organization, API Key Management, Security, and Tool Integration (e.g., Zapier) round it out.

It’s not for everyone, but for those eager to master prompt engineering, it’s a game-changer. It balances depth and accessibility, cutting learning curves as AI skills rise. Worth exploring to step up your Generative AI game!

Frequently Asked Questions

1. What is prompt engineering, and why is it important?

Prompt engineering is the process of designing and refining instructions to guide large language models (LLMs) effectively. It’s crucial because well-crafted prompts improve model performance, ensuring accurate and relevant outputs for tasks like content creation, data analysis, and automation.

2. How does Promptmetheus support real-time collaboration?

Promptmetheus offers real-time collaboration features, allowing teams to work together on shared prompts, iterate quickly, and provide feedback seamlessly. This is especially useful for teams managing complex workflows or multiple projects simultaneously.

3. Can I test prompts before deploying them?

Yes, Promptmetheus includes a testing environment where you can experiment with prompts, analyze outputs, and refine instructions. This ensures your prompts are reliable and optimized for specific tasks before integration into apps or workflows.

4. Does Promptmetheus support multiple large language models?

Absolutely. Promptmetheus is compatible with over 100 large language models, giving you flexibility to choose the best model for your needs. It also integrates with inference APIs for seamless deployment.

5. Is there a free version of Promptmetheus available?

Yes, you can start with the free playground version to explore basic features. For advanced capabilities like full traceability and modular prompt design, upgrading to the Archery IDE is recommended.

6. How does Promptmetheus improve prompt performance?

Promptmetheus provides advanced tools for prompt optimization, including context management, performance statistics, and iterative testing. These features help you create efficient and reliable prompts tailored to your specific use cases.

7. What are some common use cases for Promptmetheus?

Promptmetheus is ideal for automating repetitive tasks, enhancing AI-driven workflows, and integrating with apps for content generation, data analysis, and more. It’s a versatile tool for both individual and team-based projects.